Seedance 2.0

Explore Seedance 2.0 Generation Features

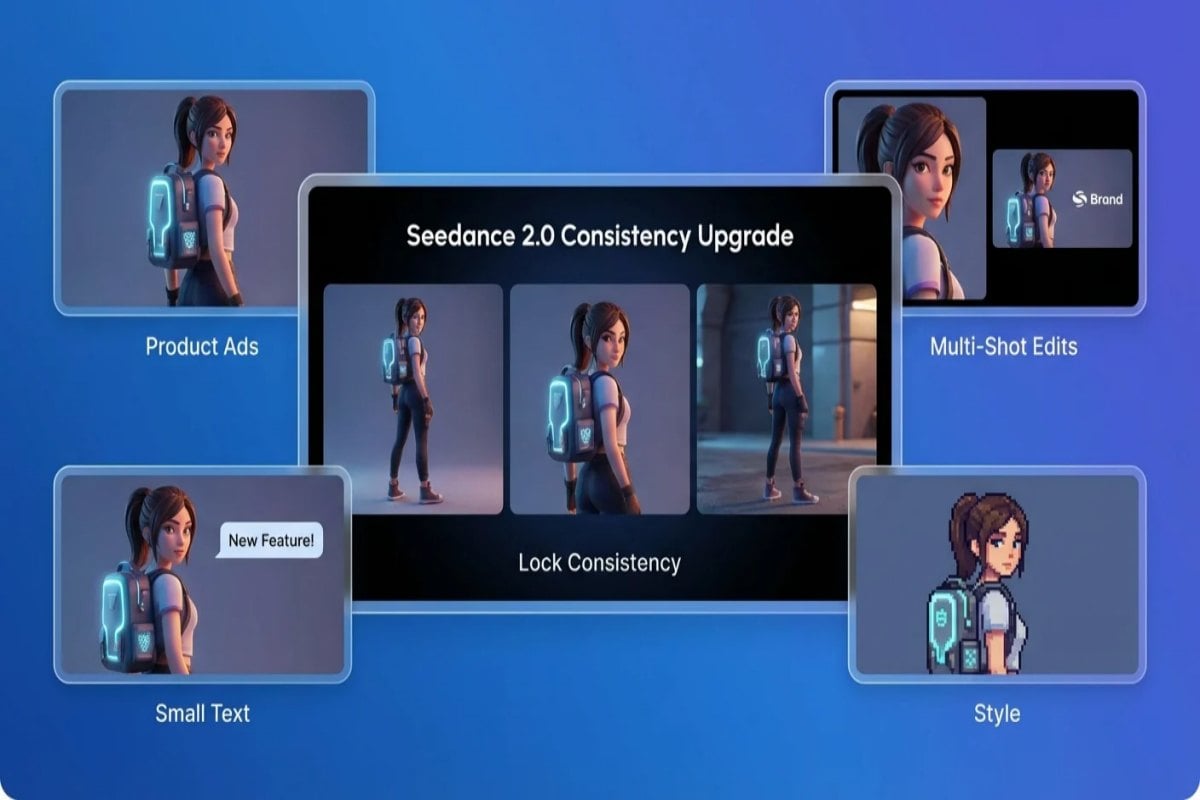

Consistency That Stays Put: Faces, Products, Small Text, and Style

The most frustrating failures in video generation usually aren’t about beauty—they’re about drift: a character’s face changes, product details vanish, tiny text turns to mush, scenes jump, or the visual style suddenly shifts mid-sequence. Seedance 2.0 is built to hold those anchors more reliably—from facial features and wardrobe to materials, logos, and typography—so multi-shot work feels steadier and more usable.

Ideal for product ads, character-driven shorts, multi-shot edits, text-forward scenes, and any workflow where continuity matters.

Truer Voices, Better Audio

Seedance 2.0 doesn’t just aim for better frames—it aims for more believable sound. Voices land closer to the intended character, with more natural phrasing and emotional dynamics, while music and ambience can sit in the scene without that obvious “template” feel. The result is audio that supports the performance instead of distracting from it.

Use it for talk-to-camera clips, dialogue scenes, narration, comedic banter, and music-led edits where timing and tone carry the story.

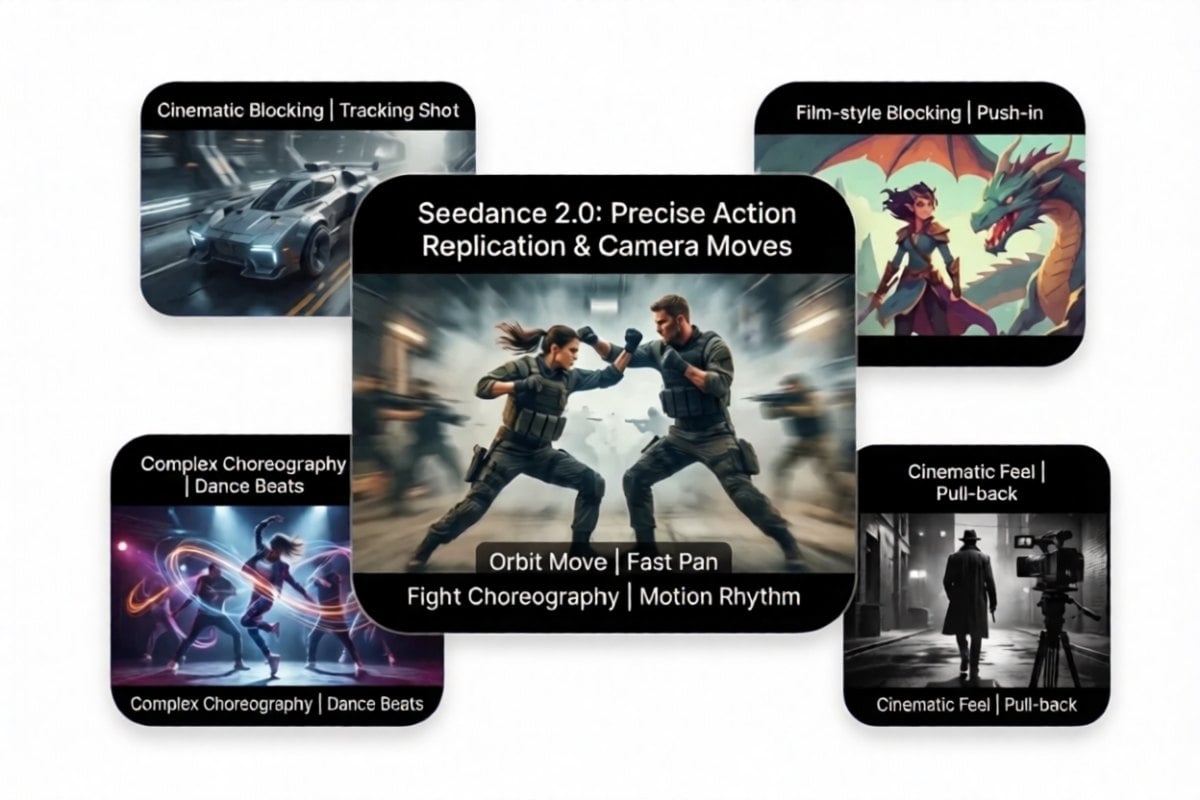

Precise Camera + Action Replication: Film-Style Blocking Made Practical

In the past, getting a model to mimic cinematic blocking, camera language, or complex choreography meant writing a wall of prompt details—or giving up. With Seedance 2.0, a single reference clip can do the heavy lifting: motion rhythm, camera moves, and action cadence stay closer to the source. Just state what to follow and what to change, and you’ll get shots that feel directed instead of “accidental.”

Great for tracking shots, push-ins and pull-backs, orbit moves, fast pans, fight choreography, dance beats, and any moment where you want to recreate a specific cinematic feel.

Audio-Visual Sync That Holds Up in Real Dialogue

Make dialogue scenes feel intentional: pacing, micro-pauses, and on-screen motion stay aligned, so shots don’t slip into that familiar “AI dub” vibe. Seedance 2.0 keeps performance readable—mouth shapes, facial tension, and small gestures track the delivery instead of drifting mid-shot.

Use it for talk-to-camera clips, explainers, character monologues, and any scene where believability comes from rhythm and timing—not just pretty frames.

Cinematic Motion, Cleaner Cuts, Less “AI Weirdness”

Seedance 2.0 is built for creators who want fewer takes, fewer patches, and more first-pass usable shots—especially when you’re working with multi-shot pacing and cinematic beats.

If you’re comparing the Seedance lineup, jump into our AI video generator and see how Seedance 2.0 relates to Seedance 1.5 Pro, Seedance Pro, and Seedance Lite—so you can pick the right balance of quality, speed, and control for your workflow.

Key Features of Seedance 2.0

- More Believable Performance

- Precise Camera + Action Replication

- Creative Templates, Plus Complex Effects That Duplicate Cleanly

- Stronger Creativity + Story Completion

- Consistency That Stays Put

- Extend and Continue Without a Hard Reset

- More Natural Motion + Audio That Feels Truer

- Seedance 2.0 vs Seedance 1.5 Pro

More Believable Performance

| Prompt | Generated Video |
|---|---|
| A cinematic close-up of a presenter in a softly lit studio. The camera slowly pushes in as they deliver a short, emotional line. Their facial micro-expressions change naturally with the pacing, and the mouth movement stays consistent across the full shot. | |

Precise Camera + Action Replication

| Prompt | Reference Video | Generated Video |
|---|---|---|
| Use **@Image 1** as the main subject (the female celebrity). Follow **@Video 1** for the camera style with rhythmic push-ins, pull-backs, pans, and moves. The celebrity’s performance should also follow the dance actions from the woman in **@Video 1**, delivering an energetic, lively stage show. | | |
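The prompt above follows a simple pattern: name the subject reference, say what to follow from the reference clip, and say what to change. A minimal Python sketch of that pattern is shown here. The `ReferencePrompt` class and its field names are hypothetical helpers for assembling prompt text; they are not part of any Seedance API, and the exact wording the model responds to best is an assumption.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class ReferencePrompt:
    """Hypothetical helper that assembles a reference-driven prompt string."""

    subject_tag: str                  # e.g. "@Image 1"
    motion_tag: str                   # e.g. "@Video 1"
    follow: List[str]                 # what to copy from the reference clip
    change: List[str] = field(default_factory=list)  # what should differ

    def render(self) -> str:
        if not self.follow:
            raise ValueError("state at least one thing to follow from the reference")
        followed = ", ".join(self.follow)
        parts = [
            f"Use {self.subject_tag} as the main subject.",
            f"Follow {self.motion_tag} for {followed}.",
        ]
        if self.change:
            changed = "; ".join(self.change)
            parts.append(f"Change: {changed}.")
        return " ".join(parts)


prompt = ReferencePrompt(
    subject_tag="@Image 1",
    motion_tag="@Video 1",
    follow=["camera push-ins and pull-backs", "dance actions"],
    change=["deliver an energetic, lively stage show"],
)
print(prompt.render())
```

Keeping the "follow" and "change" lists separate makes it easier to adjust one without accidentally rewriting the other between takes.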

Creative Templates, Plus Complex Effects That Duplicate Cleanly

| Prompt | Reference Image | Reference Video | Generated Video |
|---|---|---|---|
| Ink-wash black-and-white style. Use the character in @Image 1 as the main subject, and follow @Video 1 for the effects and movements to perform an ink-painting Tai Chi kung fu sequence. | | | |

Stronger Creativity + Story Completion

| Prompt | Reference Image | Reference Video | Generated Video |
|---|---|---|---|
| A cinematic close-up of a presenter in a softly lit studio. The camera slowly pushes in as they deliver a short, emotional line. Their facial micro-expressions change naturally with the pacing, and the mouth movement stays consistent across the full shot. | | | |

Consistency That Stays Put

| Prompt | Reference Video | Generated Video |
|---|---|---|
| Replace the woman in **@Video 1** with a **traditional Chinese opera *huadan*** character. Set the scene on an elegant, ornate stage. Follow **@Video 1** for camera movement and transitions, using the camera to closely match the performer’s actions. Aim for a refined, theatrical aesthetic with strong visual impact. | | |

Extend and Continue Without a Hard Reset

| Prompt | Generated Video |
|---|---|
| Extend @Video 1 by 15 seconds: from 0–5s, light and shadow pass through window blinds and slowly glide across a wooden table and the surface of a cup while tree branches outside sway gently like a soft breath; from 6–10s, a single coffee bean floats down from the top of the frame and the camera pushes in toward it until the image fades to black; from 11–15s, English text gradually appears in three lines. | |
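The extension prompt above splits the added time into ordered, non-overlapping segments. As a rough sketch, the same structure can be assembled and sanity-checked in Python before it is pasted into the prompt box. The function `build_extension_prompt` is a hypothetical helper, not a Seedance API, and the "from N–Ms" phrasing simply mirrors the example prompt.

```python
from typing import List, Tuple

Segment = Tuple[int, int, str]  # (start second, end second, description)


def build_extension_prompt(video_tag: str, segments: List[Segment], total_seconds: int) -> str:
    """Assemble a time-segmented extension prompt (illustrative helper only)."""
    clauses = []
    previous_end = None
    for start, end, description in segments:
        if start < 0 or start >= end:
            raise ValueError(f"invalid segment {start}-{end}")
        if end > total_seconds:
            raise ValueError(f"segment {start}-{end} exceeds {total_seconds}s")
        if previous_end is not None and start < previous_end:
            raise ValueError(f"segment {start}-{end} overlaps the previous one")
        # \u2013 is the en dash used in the example prompt's time ranges.
        clauses.append(f"from {start}\u2013{end}s, {description}")
        previous_end = end
    body = "; ".join(clauses)
    return f"Extend {video_tag} by {total_seconds} seconds: {body}."


print(build_extension_prompt(
    "@Video 1",
    [
        (0, 5, "light and shadow pass through window blinds"),
        (6, 10, "a single coffee bean floats down and the image fades to black"),
        (11, 15, "English text gradually appears in three lines"),
    ],
    total_seconds=15,
))
```

Checking the segments up front catches overlapping or out-of-range beats before a generation is spent on them.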

More Natural Motion + Audio That Feels Truer

Seedance 2.0 vs Seedance 1.5 Pro

| Feature | Seedance 2.0 | Seedance 1.5 Pro |
|---|---|---|
| What you'll feel first | More film-like pacing and performance nuance for dialogue-heavy scenes | A mature baseline with strong sync and solid stability across everyday dialogue scenes |
| Prompt interpretation | More consistent with camera-language prompts and shot intent | Reliable for straightforward prompts and quick iterations |
| Motion quality | Cleaner motion arcs and fewer odd micro-jitters in close shots | Reliable motion for general scenes |
| Performance consistency | Steadier facial detail and less expression drift across a shot | Good identity stability in short clips |
| Best use case | Cinematic promos, scripted talk-to-camera, story beats | Fast social drafts, simple explainers |
| Where it sits | Seedance 2.0 generation | Previously released generation |

Seedance 2.0 Parameters


Features of the Seedance 2.0 Video Model

- Audio-Visual Coherence First

- Stabler Frames & Fewer Artifacts

- Better Cinematic Prompt Control

- Flexible Formats for Real Work

- More Expressive Acting

- Faster Iteration Loop


You may want to know

What is Seedance 2.0?

Why is Seedance 2.0 different from other AI video tools?

Why does audio-visual alignment matter so much for AI video?

How do I prompt Seedance 2.0 for cinematic results?

What are the best use cases for Seedance 2.0?

Seedance 2.0 vs Seedance 1.5 Pro: which should I pick?

How can I reduce artifacts and improve consistency in my video?

What is the maximum video duration Seedance 2.0 can generate?

What inputs can I use with Seedance 2.0 (text, image, video, audio)?

How do I use reference videos without over-constraining the result?

How do I maintain character consistency across multiple shots?

How do I get cleaner camera moves (push-in, pan, orbit) and avoid jitter?

Can Seedance 2.0 replicate complex transitions or template-style edits?

How can I add more seconds to a clip without breaking continuity?

Can I edit an existing video instead of generating from scratch?

How do I avoid text issues like blurry signage or unreadable labels?

How should I handle sensitive or restricted real-person face inputs?

What’s a good workflow for production teams (consistency + speed)?

Try Seedance 2.0 Now

Turn prompts or images into cinematic clips with clearer motion, steadier scenes, and more natural performance.
