How to Animate a Photo with Sora 2: Ultimate Guide 2025

- Why prompt intent matters more than adjectives

- A three-step workflow aligned to “animate a picture”

- Example prompt scaffolds (copy, tweak, ship)

- Planning grid: from single shot to micro-story

- Quality & safety checklist (fast but thorough)

- Why teams use GoEnhance AI for this workflow

- Troubleshooting: quick causes and fixes

- Conclusion

Turning a single still into expressive, camera-smart motion is now a practical workflow, not a research demo. This article shows how to build a dependable pipeline around Sora 2, why “prompt intent” is the lever that determines realism and pacing, and how GoEnhance AI stitches the pieces together so a portrait or product image becomes a short that looks intentional—not synthetic.

Why prompt intent matters more than adjectives

Good outputs start with precise, film-literate inputs. Instead of stacking descriptors, write prompts that encode who/what, where, how the camera behaves, and how time flows. Use this structure as a checklist:

- Subject & mood: who is on screen, micro-expression, wardrobe, texture.

- Environment & light: time of day, key/fill/rim, contrast, haze.

- Camera & motion: shot size, lens feel, path (push/slide/parallax), speed, easing in/out.

- Physics & details: cloth/hair micro-motion, steam, reflections, depth of field.

- Output spec: aspect ratio (3:2 or 9:16), duration (8–12 s works well), cadence (begin still → breathe → gentle hold).

Two practical rules:

- Constrain motion amplitude. “2–3° head tilt” beats “slight turn.”

- Make time explicit. Stating how the shot starts and ends gives the model a stable path.
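
One way to keep the checklist and both rules honest is to assemble prompts from named fields instead of freehand text. The Python sketch below is illustrative only: `ShotIntent`, `to_prompt`, and `vague_terms_in` are hypothetical names, not part of Sora 2 or GoEnhance AI, and the result is a plain string to paste into whichever prompt field you use.

```python
from dataclasses import dataclass


@dataclass
class ShotIntent:
    """Hypothetical container for the five checklist items above."""
    subject: str       # who/what is on screen, mood, wardrobe
    environment: str   # setting and light
    camera: str        # shot size, lens feel, path, speed
    physics: str       # micro-motion and optical details
    cadence: str       # how the shot starts and ends
    aspect: str = "3:2"
    duration_s: int = 10

    def to_prompt(self) -> str:
        # Join the parts in checklist order; the output spec goes last.
        parts = [self.subject, self.environment, self.camera,
                 self.physics, self.cadence]
        body = "; ".join(p.strip().rstrip(".;") for p in parts if p.strip())
        return f"{body}. Aspect {self.aspect}, {self.duration_s} s."


# Assumed word list: amplitude words that should be replaced with numbers.
VAGUE_TERMS = ("slight", "a bit", "somewhat")


def vague_terms_in(text: str) -> list[str]:
    """Return the vague amplitude words found in text."""
    lowered = text.lower()
    return [term for term in VAGUE_TERMS if term in lowered]


if __name__ == "__main__":
    intent = ShotIntent(
        subject="Medium close-up of a young professional",
        environment="warm late-afternoon rim light",
        camera="gentle 50 mm push-in",
        physics="cup steam drifting",
        cadence="begin on still, breathe, end on soft hold",
    )
    print(intent.to_prompt())
    print(vague_terms_in("slight turn"))
```

Keeping the cadence as its own required field means a prompt cannot be built without stating how the shot starts and ends, which is the second rule in practice.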

A three-step workflow aligned to “animate a picture”

Step 1 — Establish a tasteful base move

Start inside GoEnhance AI with the animate a picture tool. Upload a sharp portrait or product photo and dial in subtle motion: natural blink cadence, shallow rack focus, a gentle push-in or parallax. The goal is to wake the still without warping geometry.

Step 2 — Enrich with model-aware realism

Send that base to the model stage and apply your structured intent. Keep it concise and physical—define light, camera, and micro-actions. Sora is strongest when it interprets an environment rather than fakes big moves.

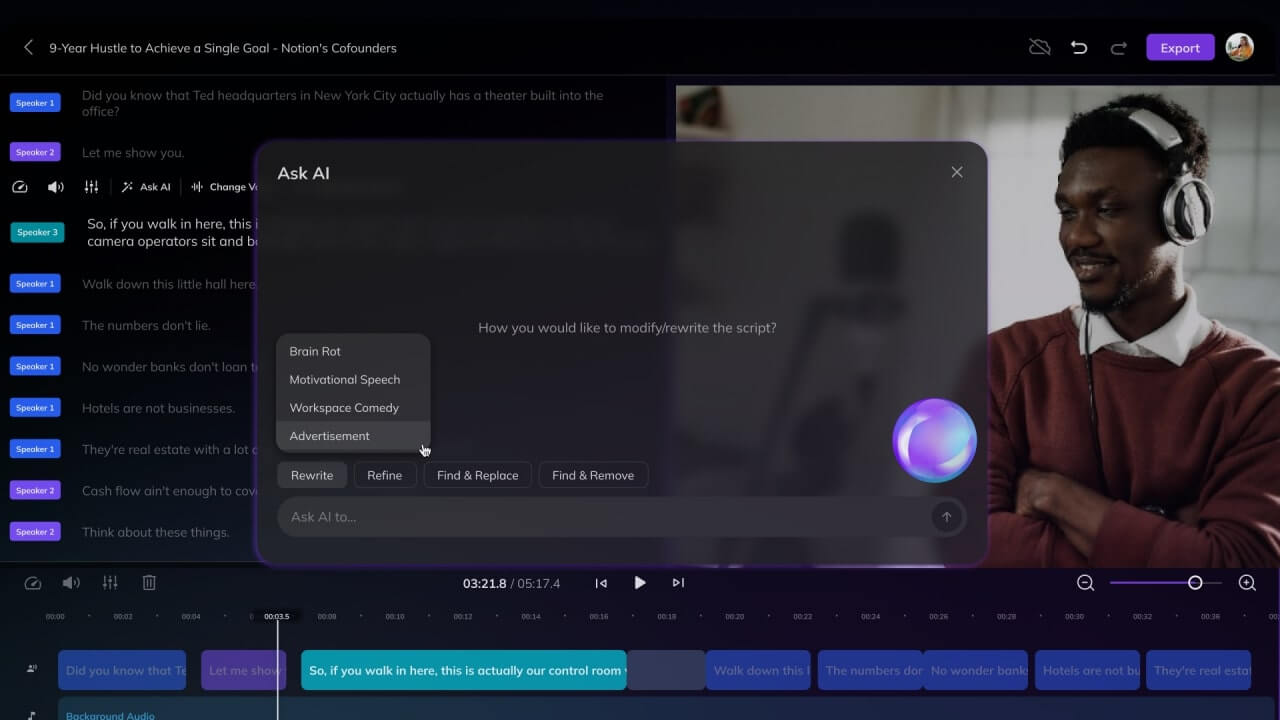

Step 3 — Finish and package for platforms

Back in GoEnhance AI, clean edges with 4K upscale and frame interpolation, trim to beat, add a caption or logo, and export. If you’re assembling multiple animated stills into a sequence, move into the editor or the main AI video generator to align color, typography, and pacing across shots. For series work, the image to video path helps maintain consistent lens feel and motion magnitude from clip to clip.

Example prompt scaffolds (copy, tweak, ship)

Editorial portrait (8–10 s, 3:2)

“Medium close-up of a young professional by a café window; warm late-afternoon rim light; soft blink and micro-smile; gentle 50 mm push-in; hair and jacket fibers move subtly; cup steam drifting; shallow depth of field; begin on still, breathe, end on soft hold.”

Product beauty (8–12 s, 3:2)

“Matte black earbuds on walnut desk under skylight; delicate reflections; slow right-to-left parallax; shallow DoF; brief rack focus to logo; end on logo sharp, motion easing out.”

Governance note: platforms are converging on AI-content disclosure. For watermarks and provenance, see DeepMind’s SynthID overview and YouTube’s disclosure guidance. For licensing basics, the WIPO knowledge pages are a solid primer.

Planning grid: from single shot to micro-story

| Beat | Visual goal | Motion notes | On-screen text |
|---|---|---|---|
| 1. Establish | Subject in context | 5–10% push-in, calm ease-in | “Meet Ava” |
| 2. Reveal | Gesture or product detail | Parallax + light rack focus | “New in matte black” |
| 3. Hold | Confident still | Ease-out, minimal drift | Logo + short CTA |

Keep total runtime tight (20–30 s for three beats). If your still was composed for landscape, preserve 3:2; for vertical, design framing up front to avoid aggressive crops.
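
The runtime and crop advice comes down to simple arithmetic, sketched here in Python. The per-beat durations are assumed values for illustration, and the helper names are hypothetical. The crop function assumes a center crop at full height, which is only one way a platform might reframe a landscape clip.

```python
from fractions import Fraction


def total_runtime(beat_durations_s):
    """Sum the per-beat durations in seconds."""
    return sum(beat_durations_s)


def within_budget(beat_durations_s, low_s=20, high_s=30):
    """Check the total against the 20-30 s range suggested for three beats."""
    return low_s <= total_runtime(beat_durations_s) <= high_s


def kept_width_fraction(source_aspect: Fraction, target_aspect: Fraction) -> Fraction:
    """Fraction of the source width that survives a full-height center crop.

    Aspects are width/height. If the target is not narrower than the
    source, no width is lost in this model.
    """
    if target_aspect >= source_aspect:
        return Fraction(1)
    return target_aspect / source_aspect


if __name__ == "__main__":
    beats = [8, 10, 8]  # assumed durations for Establish, Reveal, Hold
    print(total_runtime(beats), within_budget(beats))
    # 3:2 landscape cropped to 9:16 vertical
    print(kept_width_fraction(Fraction(3, 2), Fraction(9, 16)))
```

Under these assumptions a 3:2 frame cropped to 9:16 keeps 3/8 of its width, so more than half the picture is discarded. That is why vertical framing is worth designing before the shot is generated.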

Quality & safety checklist (fast but thorough)

- Faces first. Watch eye corners and nasolabial lines; if they wobble, shorten duration or reduce amplitude.

- Type & logos. Avoid fast lateral motion when text appears; consider compositing the logo as a separate overlay layer in the editor for razor-sharp edges.

- Light continuity. Match key direction and contrast if you chain multiple shots.

- Rights & attribution. Confirm image licenses and model/property releases; archive settings and renders for auditability.

- Labels. Include “AI-generated” or platform-specific markers where required.
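
Parts of this checklist can be turned into a quick pre-flight check before export. The sketch below is a hypothetical helper, not a GoEnhance AI feature: `ClipReview` and `preflight` are made-up names, and the default limits (12 s, 3°) are assumptions borrowed from the duration and amplitude guidance in this article. Visual checks such as face wobble and light continuity still need a human eye.

```python
from dataclasses import dataclass


@dataclass
class ClipReview:
    """Hypothetical record a reviewer fills in for one clip."""
    duration_s: float
    head_turn_deg: float
    license_confirmed: bool
    releases_on_file: bool
    ai_label_applied: bool


def preflight(clip: ClipReview,
              max_duration_s: float = 12.0,
              max_head_turn_deg: float = 3.0) -> list[str]:
    """Return a list of human-readable issues; an empty list means clear."""
    issues = []
    if clip.duration_s > max_duration_s:
        issues.append(f"duration {clip.duration_s} s exceeds {max_duration_s} s")
    if clip.head_turn_deg > max_head_turn_deg:
        issues.append(
            f"head turn {clip.head_turn_deg} deg exceeds {max_head_turn_deg} deg"
        )
    if not clip.license_confirmed:
        issues.append("image license not confirmed")
    if not clip.releases_on_file:
        issues.append("model/property release missing")
    if not clip.ai_label_applied:
        issues.append("AI-generated label not applied")
    return issues


if __name__ == "__main__":
    clip = ClipReview(duration_s=14, head_turn_deg=2.5,
                      license_confirmed=True, releases_on_file=False,
                      ai_label_applied=True)
    for issue in preflight(clip):
        print("-", issue)
```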

Why teams use GoEnhance AI for this workflow

- Camera-smart presets keep geometry stable while adding life (push, slide, parallax, “breathing” loops).

- High-fidelity finishing with 4K upscaling and frame interpolation yields crisp edges and smooth micro-actions.

- Shot consistency at scale via batch aspect/duration/LUT settings—ideal for campaign variants.

- An editor built for shorts, with captions, safe-area guides, beat markers, and clean export profiles.

- Governance-friendly: watermark passthrough and export notes align with emerging disclosure norms.

GoEnhance AI’s value isn’t just the model stage—it’s the repeatability: a pipeline your team can run daily without quality swings.

Troubleshooting: quick causes and fixes

| Symptom | Likely cause | Quick fix |
|---|---|---|
| Mouth or eyes deform on motion | Motion amplitude too high; duration too long | Shorten to 8–10 s; reduce head-turn to ≤3° |
| Logo edges look soft | Scaling or compression during motion | Composite logo in editor; upscale then downscale |
| Parallax feels “floaty” | Background depth cues missing | Add subtle rack focus; limit lateral drift |
| Flicker on fine textures | Over-sharpened source or grain | Soften grain slightly; upscale before interpolation |

Conclusion

Animating a photo isn’t a parlor trick—it’s a compact filmmaking exercise: light, lens, and time, distilled. With Sora’s depth-aware interpretation and GoEnhance AI’s finishing tools, a single image can carry a complete beat that feels intentional and brand-safe. Build your shot with a clear prompt intent, establish a subtle base move, let the model enrich realism, then package it cleanly for the feed. The result is motion that respects the original photograph—and earns its place in your content calendar.